Release Notes

Below is a list of the various changes that have been made to the supercomputing clusters that we want you to be aware of. This page is intended to be updated whenever something new is added so you can keep up to date on what we've been working on.

Run into any new problems, or have any questions about the changes made? Contact the HPC Team or stop by our Schofield Hall 134 office.

Clusters / Services:

- BOSE: bose.hpc.uwec.edu

- BGSC: bgsc.hpc.uwec.edu

- OnDemand: ondemand.hpc.uwec.edu

- JupyterHub: jupyter.hpc.uwec.edu

- Metrics: metrics.hpc.uwec.edu

Jump To Section

2026: Winterim | Spring

2025: Winterim | Summer | Fall

2024: Winterim | Spring | Summer | Fall

2023: Winterim | Spring | Summer | Fall

Spring 2026

February 13th, 2026

New Software - Bowtie2 2.5.4

Bowtie2 2.5.4 has been installed on BOSE.

Links: Bowtie2 Guide

February 5th, 2026

New Software - SAMtools 1.23

SAMtools 1.23 has been installed on BOSE.

Links: SAMtools Guide

February 4th, 2026

New Software - FastQC 0.12.1

FastQC 0.12.1 has been installed on BOSE.

Links: FastQC Guide

New Software - MEGAHIT 1.2.9

MEGAHIT 1.2.9 has been installed on BOSE.

Links: MEGAHIT Guide

New Software - Sickle 1.33

Sickle 1.33 has been installed on BOSE.

Links: Sickle Guide

January 26th, 2026

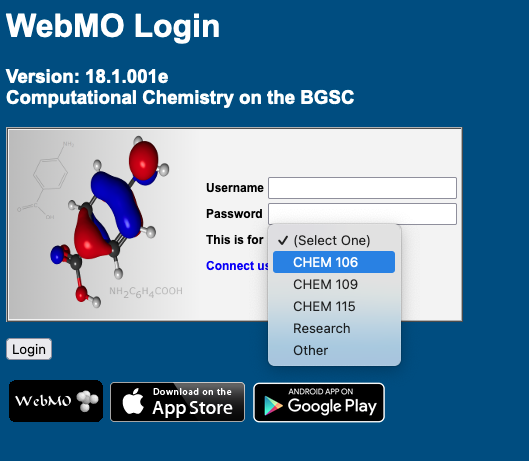

WebMO on BOSE - Officially Released

After hosting WebMO on the BGSC cluster for many years, which has been a big benefit to our Chemistry classes, we have finally moved it to the BOSE cluster. This allows for greater capacity and support for more complex calculations due to the increase in available resources.

This version also introduces:

- New login process to bring it in line with other HPC-supported applications

- Upgrade from version 18 to version 24

- Support for jobs requiring higher amounts of memory and/or GPU access

Links: WebMO (New Site) | WebMO Guide | WebMO (Official Site)

Winterim 2026

January 21st, 2026

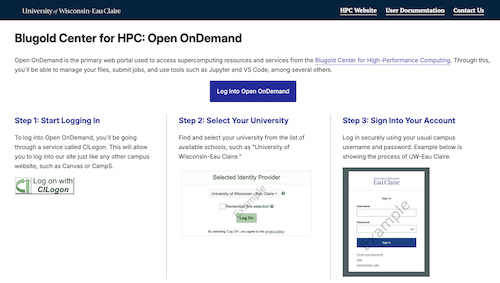

OnDemand: New Login Process

Open OnDemand has been updated with a whole new login process to support accounts from other UW Campuses.

Links: Open OnDemand

Updated Software: RStudio

RStudio on BOSE has been updated to version 2025.09.1 with R 4.5.2 and is now considered officially released in Open OnDemand. Besides dropping the preview flag, this update also lets you choose which version you'd like to use.

Links: RStudio

January 14th, 2026

Updated Software: MedeA 3.12.0

MedeA has been updated to version 3.12.0 on BOSE.

January 2nd, 2026

BOSE: CUDA 12.9

CUDA version 12.9 has been rolled out on all GPU nodes and the toolkit is available under the cuda/12.9 module. Any existing Python libraries and scripts may need to be updated to take full advantage of new features.

BOSE: Cluster-Wide Commands

Commands that used to run only on the login node have been made available cluster-wide and can now be used in interactive mode and desktop mode. This includes commands such as myaccounts, myjobreport, myjobs, myqos, myquota, and savail.

Fall 2025

November 21, 2025

Updated Software: Firefox 145

The built-in Firefox available in Desktop Mode has been upgraded to version 145. This is a huge improvement over the original version 91 (released in 2021) and brings support for many recent technologies, such as the ability to run the chat UI for llama.cpp.

Updated Software: Llama.cpp b1726

Llama.cpp b1726 has been installed on BOSE.

Links: Llama.cpp Guide

November 13, 2025

New Software: Ollama 0.12.10

Ollama 0.12.10 has been installed on BOSE. This gives the ability to run the gpt-oss:20b and 120b models.

Links: Ollama Guide

November 6, 2025

New Software: Chemprop 2.2.1

Chemprop 2.2.1 has been installed on BOSE.

Links: Chemprop Guide

October 13, 2025

Updated Software: Gurobi Optimizer 12.0.3

New version of the Gurobi software has been installed, along with a dedicated version for the Python API.

Links: Gurobi Guide | Gurobi Python API Guide

Summer 2025

August 29th, 2025

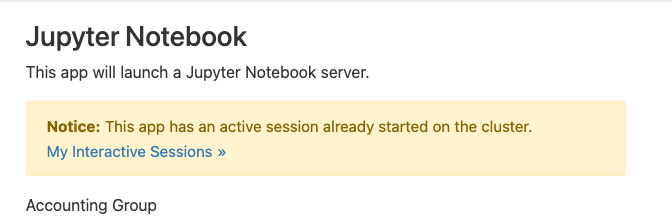

OnDemand: All Interactive Apps - Active Session

All interactive apps have been updated to indicate that an active session was already started for that application. This makes it easier for everyone to connect to an existing session, such as Jupyter or Desktop, without starting up another one.

OnDemand: Jupyter - Additional Software Modules

The Jupyter application within Open OnDemand has been updated with a new field to add additional software modules. This would be used if you want to use other modules, such as Ollama, in your Jupyter notebook or terminal.

August 12th, 2025

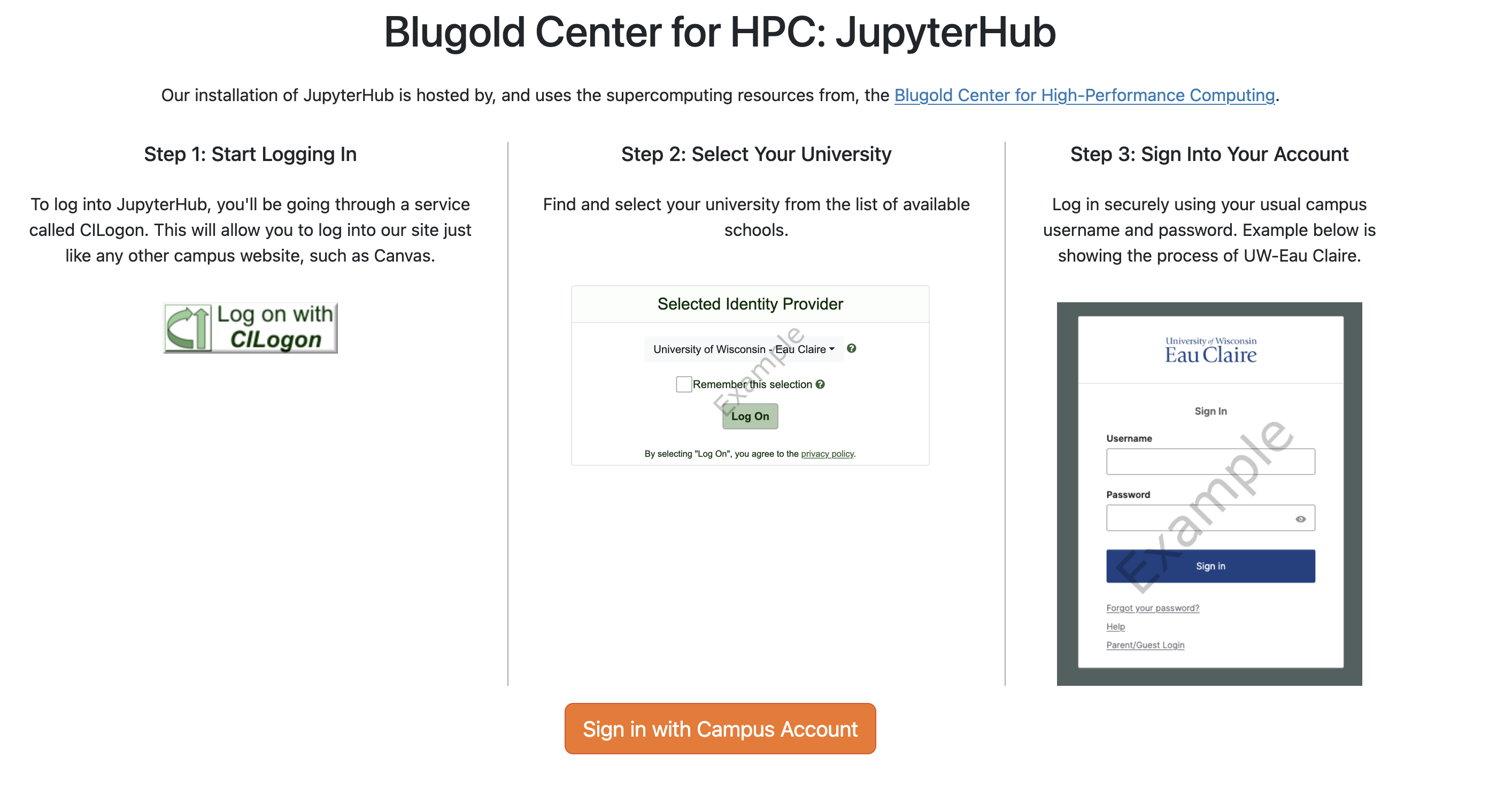

JupyterHub + NBGrader - New Tool (In Testing)

We're pleased to announce the installation of a dedicated version of JupyterHub with NBGrader installed! This has been requested by faculty in the past, and it has now been set up to support a couple of courses this fall as a trial. More information will be coming once it's officially released.

Links: JupyterHub (HPC) | JupyterHub (Official Docs) | NBGrader (Official Docs)

WebMO on BOSE (In Testing)

After hosting WebMO on the BGSC cluster for many years, we are finally making progress on upgrading and moving WebMO to the BOSE cluster. This'll allow for greater capacity and support for more complex calculations due to the increase in available resources.

WebMO is still undergoing testing to ensure everything is working as expected, including a few new custom features that we've added. Once WebMO is ready to be released, information will be sent out to all impacted faculty due to its use in the classroom and deprecation of the BGSC version. If you'd like to assist in the testing process, please contact us.

Links: WebMO (HPC - BGSC) | WebMO (Official Site)

Support for CILogon

The installation of JupyterHub and WebMO on BOSE also introduces a brand new way to log into our cluster. Going forward, all web apps (where possible) are integrated with CILogon, which supports not only UW-Eau Claire accounts but also faculty and students from other campuses, starting with UW-La Crosse. This is a huge step forward in our ability to support classes and research collaborators from other universities.

July 18th, 2025

sinteract - Updated defaults

The sinteract command has been updated to default to four cores instead of one core, and remains at a time limit of eight hours.

You can learn more about the command and how to override its default settings by checking out our Interactive Mode guide.

July 14th, 2025

New Software: JAGS 4.3.2

Just Another Gibbs Sampler (JAGS) 4.3.2 has been installed on BOSE.

Links: JAGS Guide

June 11th, 2025

New GPU Node - H100 Installation

A new H100 GPU server has been installed in BOSE and contains 2x94GB H100 cards. This is a limited-access server that is prioritized for the Computer Science department and is configurable to focus on capacity vs performance based on different needs. Work is still ongoing to get it fully up and running.

Links: NVIDIA H100 | H100 Usage Guide

Winterim 2025

January 16th, 2025

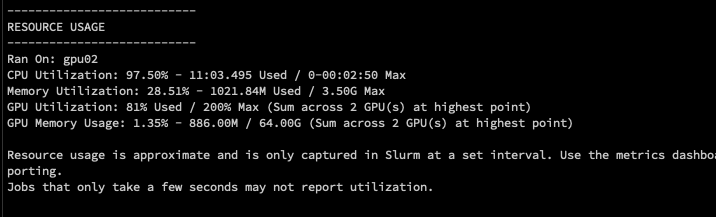

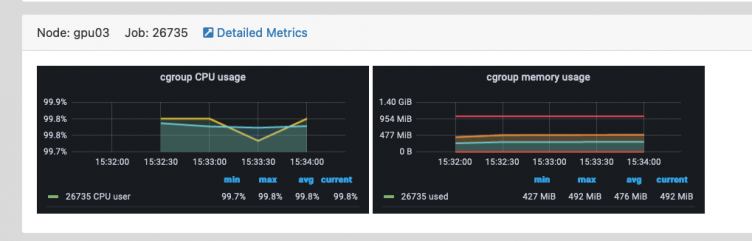

BOSE: myjobreport updated to show GPU statistics

The myjobreport JOBID command has been upgraded (as well as Slurm's email notification) to now include the following two statistics for jobs after they are completed:

- GPU Utilization (%) - How much of the GPU's processing power was utilized.

- GPU Memory Usage - How much virtual memory was used on the cards. (Each of our current NVIDIA V100S GPU cards has 32GB of memory)

Note that these statistics only show the highest amount of usage across all requested GPU cards at one time, so you'll want to review the metrics dashboard further down in the report to see usage across the entire time your script was running.

Also with this update, the URLs to the real-time metric dashboards now only show the GPU cards you used for your job. Previously all three cards on a node were shown in the graphs, even if you only requested one or two cards.

January 3rd, 2025 (BOSE Maintenance)

BOSE: Support for GPU sharding added (Experimental)

Experimental

This is a brand new feature that we are experimenting with for the upcoming Spring semester.

One of the biggest challenges that we've been facing recently is that we are limited on the number of GPU cards available for our classrooms and researchers. Having only 12 GPU cards across four nodes means that we've been having to compromise and balance supporting classes that need GPUs alongside jobs from our research groups, limiting how much work can be done.

We're attempting to work around this by implementing one of Slurm's features called "GPU Sharding", which allows us to run multiple jobs on the same GPU cards, taking advantage of smaller programs that only need a portion of a GPU to run. This is especially useful for our classes which can vary in number of students.

Each node can be split up into 24 shards (8 per GPU card), and a node that is set aside to use sharding will not run a normal GPU job at the same time. The reverse is true as well, that once a node runs a normal GPU job, it will not be available for sharded jobs.

To Use:

- Partition must be set to "GPU": (#SBATCH --partition="GPU")

- Set the number of shards you want to use: (#SBATCH --gres=shard:1) for a max of 24.

- You must not be using --gpus=X or --gres/gpus=X

Note: There are no limitations or separation on GPU utilization or memory usage between jobs running on the same GPU card at this time. We also have not updated our reporting tools to take into account GPU sharding, which will be implemented over time.

Further information and instructions will be coming once we work with some of our faculty members on trying out the new feature.
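As a rough illustration of the settings listed above, a sharded job script might look like the sketch below. The job name, time limit, and shard count are placeholders, and the idea that Slurm exposes the assigned card through CUDA_VISIBLE_DEVICES is an assumption that should be checked on BOSE before relying on it.

```shell
#!/bin/bash
#SBATCH --job-name=shard-demo   # hypothetical job name
#SBATCH --partition=GPU         # sharded jobs must use the GPU partition
#SBATCH --gres=shard:2          # 2 of a node's 24 shards (8 per GPU card)
#SBATCH --time=00:10:00         # placeholder time limit
# Note: no --gpus=X line here; a shard request replaces a whole-GPU request.

report_gpu() {
    # Assumption: Slurm sets CUDA_VISIBLE_DEVICES for the card backing the shards.
    echo "Visible GPU(s): ${CUDA_VISIBLE_DEVICES:-none}"
    # nvidia-smi only exists on GPU nodes, so skip it everywhere else.
    if command -v nvidia-smi >/dev/null 2>&1; then
        nvidia-smi --query-gpu=name,memory.used,utilization.gpu --format=csv \
            || echo "nvidia-smi query failed"
    fi
    return 0
}

report_gpu
```

Because nothing separates jobs that share a card yet, a sharded job should keep its own GPU memory use modest so that its neighbors are not starved.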

BOSE: Improved GPU statistics with Slurm

Our installation of Slurm is now tracking GPU utilization and memory on jobs! This means that researchers are no longer required to use nvidia-smi on a GPU node while their job is running to know if a GPU card is being properly used.

Running Jobs:

Finished Jobs:

- gres/gpumem = Total GPU memory usage across all GPU cards

- gres/gpuutil = Total GPU utilization across all GPU cards

Links: sstat Documentation | sacct Documentation
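One way to pull these two values out of Slurm's accounting output is sketched below. It assumes the values appear in a comma-separated TRES usage field (for example TRESUsageInMax from sstat or sacct). The helper name tres_value and the sample numbers are made up for illustration.

```shell
#!/bin/bash
# Print the value of one TRES key (e.g. gres/gpuutil) from a comma-separated
# TRES usage string such as "cpu=00:12:31,gres/gpumem=11264M,gres/gpuutil=87".
tres_value() {
    local key="$1" tres="$2" field
    local IFS=','
    for field in $tres; do
        if [ "${field%%=*}" = "$key" ]; then
            printf '%s\n' "${field#*=}"
            return 0
        fi
    done
    return 1   # key not present
}

# Sample string in the key=value format used by TRES usage fields (values invented).
sample='cpu=00:12:31,mem=2048M,gres/gpumem=11264M,gres/gpuutil=87'
tres_value gres/gpuutil "$sample"
tres_value gres/gpumem "$sample"

# On the cluster, the input could come from something like (assumed invocation):
#   sacct -j JOBID --noheader --parsable2 --format=TRESUsageInMax
```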

Fall 2024

November 26th, 2024

BOSE/BGSC: Autocompletion for myjobreport, myjoblogin, and scancel

Are you tired of having to use myjobs and copying your job IDs to use myjoblogin, myjobreport, or scancel? Ctrl+Shift+C no more, as all three tools now feature bash autocompletion, allowing you to use <tab> and automagically fill in your job IDs.

scancel & myjoblogin

Try hitting <tab> to automagically fill in your running job's id or show possible completions if you have multiple jobs.

myjobreport

Hitting <tab> will autocomplete or show the options for any job run in the last week or currently in progress.

November 6th, 2024

Simplified savail

By default, savail now groups like nodes in the form of cn[04-41] instead of listing the status of every node on the cluster.

If you prefer the old format of savail, use savail -a or savail --all to get the comprehensive list.
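If you need the individual node names back from a grouped entry, Slurm's own scontrol show hostnames 'cn[04-41]' does the expansion. The sketch below is a bash-only equivalent for the simple prefix[first-last] form. The function name expand_nodes is hypothetical, and it does not handle comma-separated lists.

```shell
#!/bin/bash
# Expand a grouped node entry such as cn[04-41] into one node name per line.
# Anything that is not in prefix[first-last] form is printed unchanged.
expand_nodes() {
    local spec="$1"
    local re='^([A-Za-z]+)\[([0-9]+)-([0-9]+)\]$'
    if [[ "$spec" =~ $re ]]; then
        local prefix="${BASH_REMATCH[1]}"
        local first="${BASH_REMATCH[2]}"
        local last="${BASH_REMATCH[3]}"
        local width=${#first}   # keep the zero padding (04, 05, ...)
        local i
        # 10# forces base 10 so values like 08 are not read as octal.
        for ((i = 10#$first; i <= 10#$last; i++)); do
            printf '%s%0*d\n' "$prefix" "$width" "$i"
        done
    else
        printf '%s\n' "$spec"
    fi
}

expand_nodes 'cn[04-07]'
```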

September 18th, 2024

BOSE: Integration with Open Science Pool (OSPool)

Were you looking at squeue recently and were wondering who this "osg" person is that is constantly running a small number of jobs? This is from our brand new integration with the OSPool, a free distributed computing service under the OSG Consortium for researchers. Joining alongside 60+ other institutions and computing centers all over the country, we are now contributing a portion of our computing resources to support researchers on a national scale.

Check out the page below to see all the projects we've already supported through our integration with the OSPool so far:

Links: Projects We've Supported

Summer 2024

August 30th, 2024 (BGSC/BOSE Maintenance Week)

BGSC: WebMO - New Login Question

WebMO has been updated to ask users before logging in if they are going to be using it for a specific course, or for research. The HPC Admin Team uses this to better allocate resources for each of the courses and improve tracking and statistic reporting.

This change will not impact how you use WebMO beyond the login page, and it has no ties to the group system in WebMO.

BOSE/BGSC: New Commands - myaccounts, myqos, myquota

Several new tools have been created to improve reporting on the usage of the cluster and the settings applied to someone's account.

- myaccounts - Shows which accounts/groups you are part of, which is used with reservations, quality-of-service settings, and usage tracking.

- myqos - Reports what quality-of-service settings are applied to your account, such as number of nodes or GPUs you can use at any one time. Refer to our user policy for more information on restrictions.

- myquota - Reports how much disk space you are using in your home directory on the cluster. Future changes will include group reporting.

BOSE/BGSC: New Command - myjobreport - Report job statistics

A big part of working in a computing environment is understanding how well your job uses the resources you originally requested. By knowing this, you'll be able to make more informed decisions about future runs of your jobs and can adjust accordingly.

To help with this process, we created a new command myjobreport JOBID that you can use to run a report on your jobs, especially ones that completed or failed, and learn about their CPU and memory utilization. Also available in the report are links to our metrics server to see real-time statistics, even for jobs that are still actively running.

For those that turn on Slurm's email notifications, we've integrated the report right in the body of the email so you'll receive a copy every time an email is sent.

myjobreport Example

Command: myjobreport 93008

---------------------------

JOB DETAILS

---------------------------

Cluster: BOSE

Job ID: 93008

Job Name: flex-test-64Core

State: COMPLETED (exit code 0:0)

Account: uwec

Submit Line: sbatch submit-64core.sh

Working Dir: /data/users/myuser/qchem-job

---------------------------

JOB TIMING

---------------------------

Submitted At: August 29, 2024 at 3:29:55 PM CDT

Started At: August 29, 2024 at 3:29:55 PM CDT

Ended At: August 29, 2024 at 4:04:39 PM CDT

Queue Time: 0-00:00:00 (0 Seconds)

Elapsed Time: 00:34:44 (34 Minutes 44 Seconds)

Time Limit: 7-00:00:00 (7 Days)

---------------------------

REQUESTED RESOURCES

---------------------------

Partition: week

Num Nodes: 1

CPU Cores: 64

Memory: 224.00G

Num GPUs: N/A

---------------------------

RESOURCE USAGE

---------------------------

Ran On: cn54

CPU Utilization: 45.73% - 16:56:38 Used / 1-13:02:56 Max

Memory Utilization: 5.68% - 12.73G Used / 224.00G Max

Resource usage is approximate and is only captured in Slurm at a set interval. Use the metrics dashboards below for additional and more real-time reporting.

Jobs that only take a few seconds may not report utilization.

---------------------------

METRICS

---------------------------

Copy the URLs below into your browser to see real-time stats about CPU and memory usage throughout your job's running time. You must be on campus or connected to the VPN to access the pages.

cn54 | https://metrics.hpc.uwec.edu/d/aaba6Ahbauquag/job-performance?orgId=1&theme=default&from=1724963394400&to=1724965479600&var-cluster=BOSE&var-host=cn54&var-jobid=93008&var-rawjobid=93008&var-gpudevice=nvidia0|nvidia1|nvidia2

---------------------------

USING ARRAYS?

---------------------------

Support for arrays is currently limited in this report and is still being worked on. To see statistics for each individual task, you can run "myjobreport jobid_tasknumber".

Have any questions about this job? Running into an issue and would like some advice? Send this report to BGSC.ADMINS@uwec.edu and we'd be glad to assist you.

Analysis:

By looking at the above report, you can see that this job only used around 45.73% of the requested CPU, and 5.68% of the memory. Going forward, I should look to see if this job can better utilize CPU to its fullest, and drastically reduce how much memory I need.
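The percentages in the report can be reproduced by hand, which helps when deciding how many cores to request next time. The sketch below assumes Slurm's D-HH:MM:SS time format and uses a hypothetical helper named to_seconds. The numbers are taken from the sample report above.

```shell
#!/bin/bash
# Convert a Slurm-style duration (HH:MM:SS or D-HH:MM:SS) into seconds.
to_seconds() {
    local t="$1" days=0 h m s
    if [[ "$t" == *-* ]]; then
        days="${t%%-*}"   # part before the dash is whole days
        t="${t#*-}"
    fi
    IFS=: read -r h m s <<< "$t"
    # 10# forces base 10 so values like 08 are not read as octal.
    echo $(( 10#$days * 86400 + 10#$h * 3600 + 10#$m * 60 + 10#$s ))
}

used=$(to_seconds 16:56:38)      # CPU time actually used
max=$(to_seconds 1-13:02:56)     # CPU time available to the job

awk -v u="$used" -v m="$max" \
    'BEGIN { printf "CPU utilization: %.2f%%\n", 100 * u / m }'
# prints "CPU utilization: 45.73%"
```

The "Max" figure is simply elapsed time multiplied by requested cores (2,084 seconds x 64 cores = 133,376 core-seconds), so 45.73% of 64 cores works out to roughly 29 cores' worth of work.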

BOSE: CUDA 12.6

CUDA version 12.6 has been rolled out on all GPU nodes and the toolkit is available under the cuda/12.6 module. Any existing Python libraries and scripts may need to be updated to take full advantage of new features.

BOSE: Re-implemented Power Saving

If you use savail and notice that some idle nodes show "idle~", this means the node is currently under a power saving mode to reduce our power consumption. Initially implemented in 2023, this feature ended up being disabled due to problems with some nodes no longer reliably coming back online automatically. This has now been fixed!

How it works is that after nodes are idle for a set period of time, they'll automatically power down until they are needed again. Once a job is submitted that needs access to those nodes, they'll automatically power back on and become ready to be used by that job.

Note: Nodes with limited resources such as GPU and high memory are never powered off, and a set amount of compute nodes will always remain online.

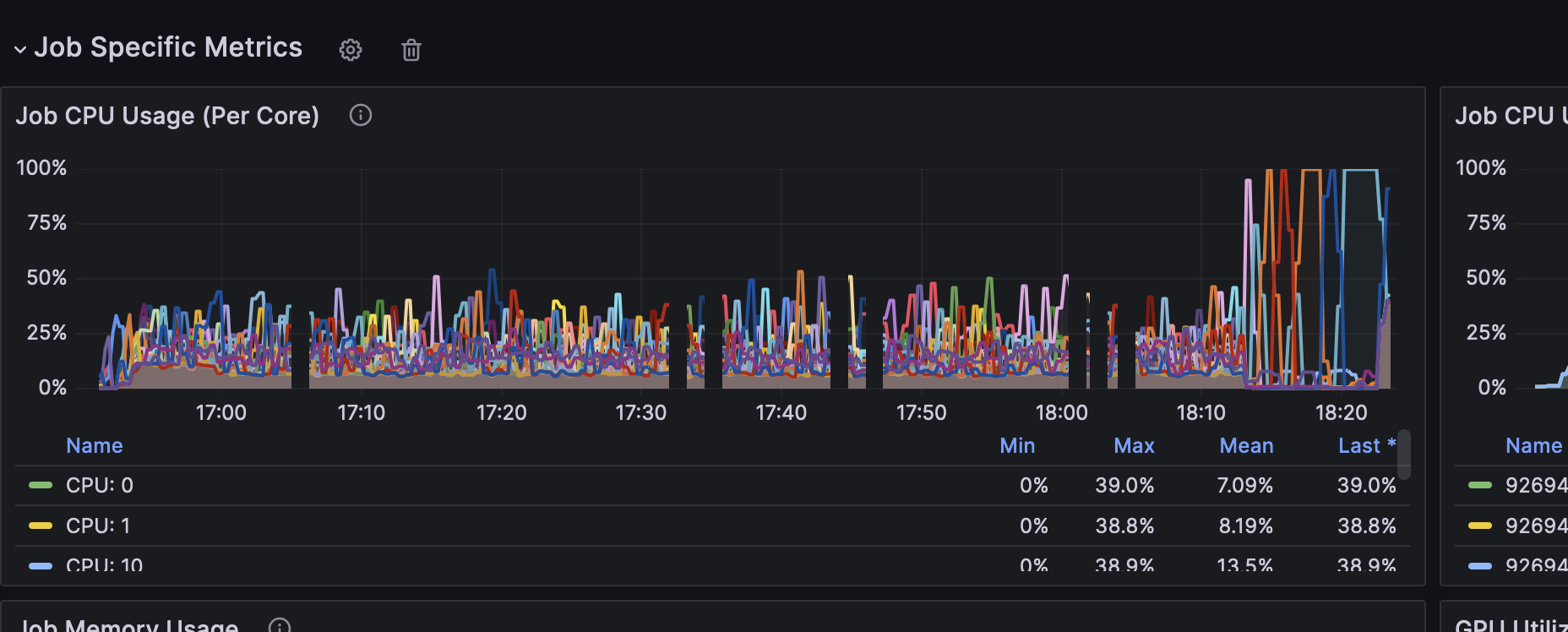

Metrics: Per-Core Statistics

Both clusters have been updated to report on CPU usage on a per-core level when previously only average was displayed. A link to a job's real-time metrics can be found when running the new myjobreport JOBID command.

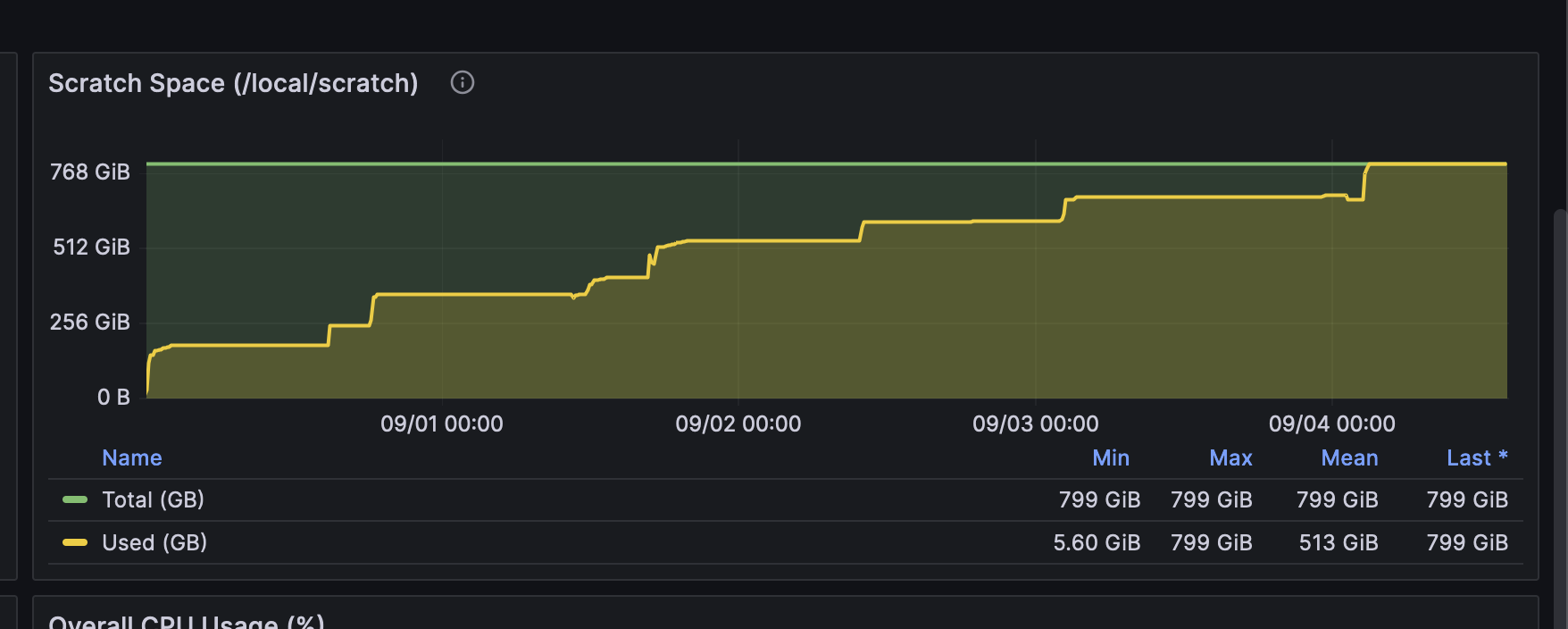

Metrics: Scratch Space Usage (Total Node)

Both clusters have been updated to report on how much scratch space is used / available on a compute node under the "Total Node Metrics" section of a job performance report. Note that the space reported includes all jobs running on the node, and may not reflect just your own job. A link to a job's real-time metrics can be found when running the new myjobreport JOBID command.

OnDemand: Visual Studio Code - New Form Fields

The request form for Visual Studio Code has been updated to support requesting additional resources, such as CPU cores, memory, and GPUs. This allows it to now be used for more than just simple code editing.

Other Changes

- BGSC - Updated WebMO to report to Slurm which user submitted a job (no more manual spreadsheet work!)

- BOSE - Updated firewall firmware

- BOSE - Updated firmware of all nodes

- BOSE - Updated and completely reworked Slurm's setup to prepare for future changes to the underlying operating system.

- BOSE - Fixed machines not properly connecting to the network on boot (very much needed!)

- BOSE - Completed initial prep work to support OSG/OSPool. Announcement will be made upon full rollout.

Spring 2024

March 27th, 2024

Slurm Job - Node Login

We've implemented a new command to simplify the ability for users to log into nodes that their jobs are running on, which is useful when you want to see how your job is doing, such as with GPU usage. Previously, you could use ssh nodename, but it doesn't fully work when a user is running multiple jobs on a single node.

myjoblogin 12345 # Log into the node for job 12345

myjoblogin 12345 node01 # Log into a specific node for job 12345 (useful when a job spans multiple nodes)

If you don't know your job's ID, you can run myjobs or squeue -u $USER to see a list of your running jobs.

You can also use srun --jobid=12345 --pty /bin/bash, but myjoblogin is a bit easier to remember.

March 18th, 2024

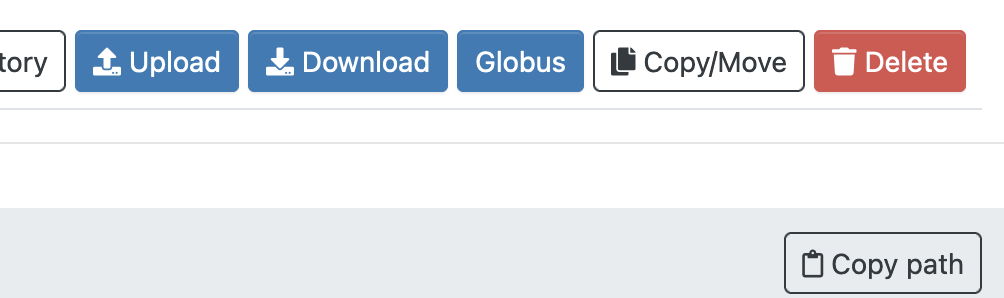

OnDemand - Globus Integration

Open OnDemand was updated to the latest release, which brought a few useful features for us to further customize our portal. One highlight for our cluster users is the integration of Globus into the File Manager. Now, researchers who have Globus set up are able to navigate to a directory in the file manager and click a button to jump to that same directory on Globus.org.

Links: Using Globus On BOSE

Winterim 2024

January 5th, 2024

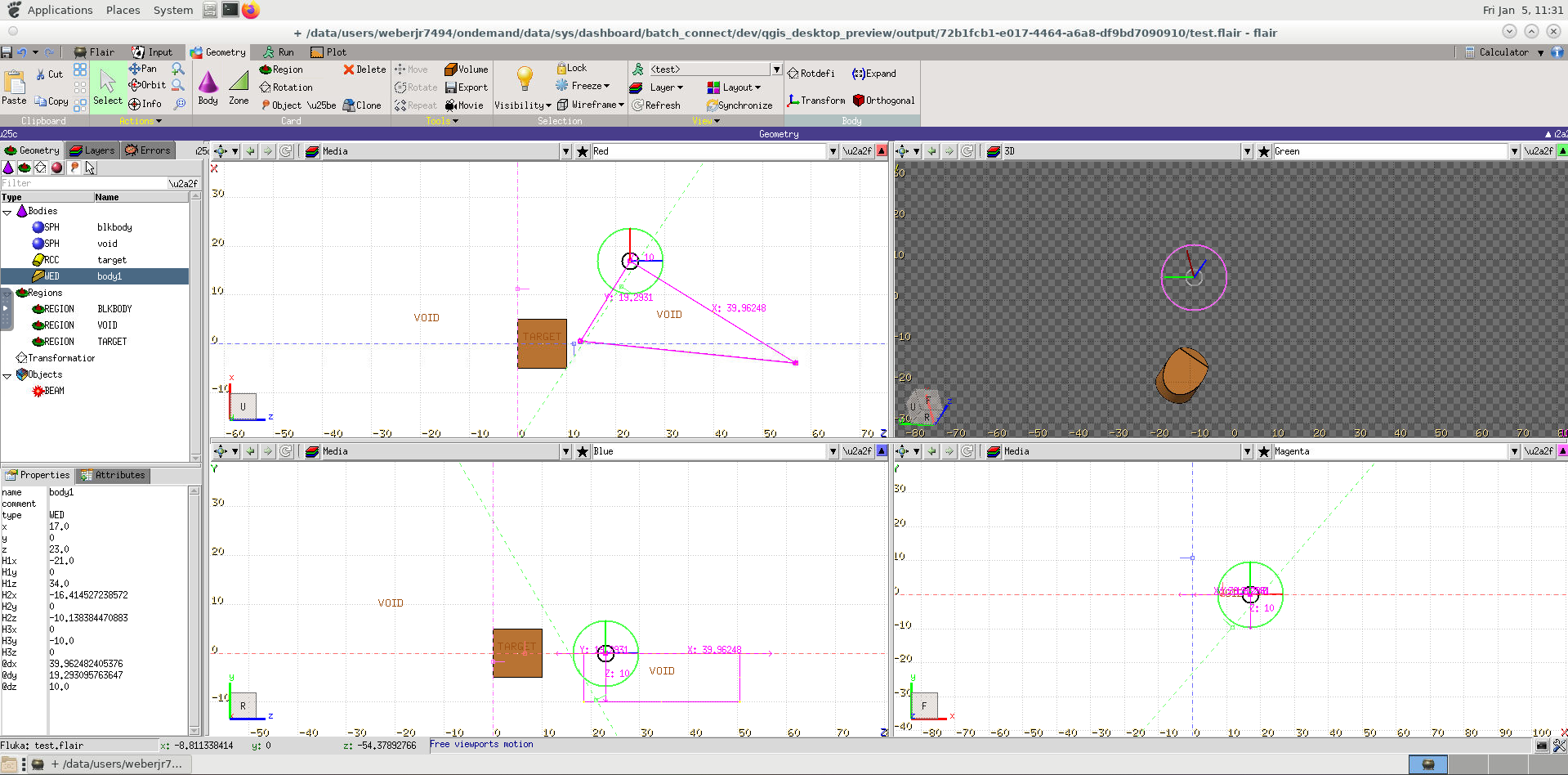

OnDemand: Flair (Desktop) - Preview Version

Now available on Open OnDemand is the ability to start up your own version of Flair. While still in development and testing, please let us know how it's working for you. Once we receive enough feedback that everything is working fairly smoothly, we'll officially release the new app.

To Access:

- Launch Open OnDemand: https://ondemand.hpc.uwec.edu

- Click on "Flair Desktop (Preview)" on the homepage OR click "Interactive Apps --> Flair Desktop (Preview)".

Flair is also available as a module, which will let you load it manually in Desktop Mode.

- Launch Open OnDemand

- Click on "Desktop" on the homepage OR click "Interactive Apps -> Desktop"

- Once launched, open up the terminal (black icon top of window)

- Load the Flair module and run the 'flair' command

January 2nd, 2024

BOSE: Four New Nodes - cn54, cn55, cn56, and lm03

Thanks to a grant by Dr. Wolf in the Physics + Astronomy department, we are excited to expand the BOSE cluster for the first time by adding four new computational nodes + networking gear. Three are standard compute nodes with 256GB of RAM available under the 'week' partition, while one is under the 'highmemory' partition with 1TB of RAM. Click the link below for a full updated list of BOSE's hardware specifications.

Links: BOSE Hardware Specs

Fall 2023

December 20th, 2023

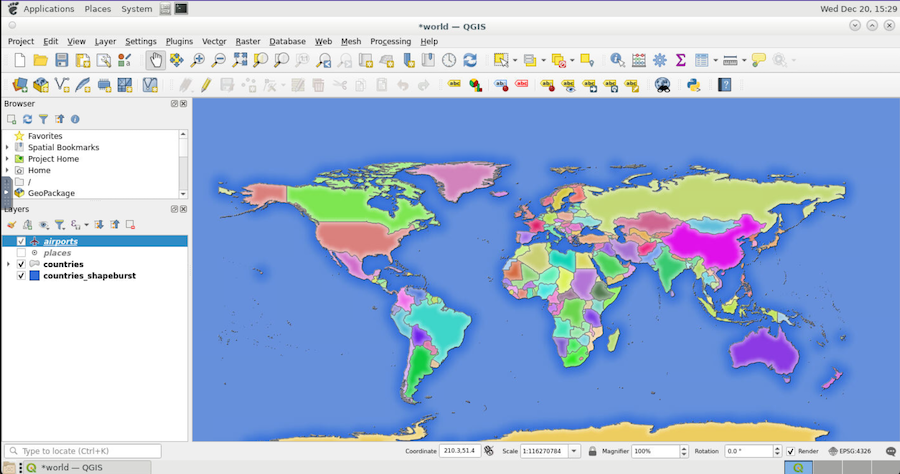

OnDemand: QGIS (Desktop) - Preview Version

Now available on Open OnDemand is the ability to start up your own version of QGIS. While still in development and testing, please let us know how it's working for you. Once we receive enough feedback that everything is working fairly smoothly, we'll officially release the new app.

To Access:

- Launch Open OnDemand: https://ondemand.hpc.uwec.edu

- Click on "QGIS Desktop (Preview)" on the homepage OR click "Interactive Apps --> QGIS Desktop (Preview)".

QGIS is also available as a module, which will let you load it manually in Desktop Mode.

- Launch Open OnDemand

- Click on "Desktop" on the homepage OR click "Interactive Apps -> Desktop"

- Once launched, open up the terminal (black icon top of window)

- Load the QGIS module and run the 'qgis' command

December 19th, 2023

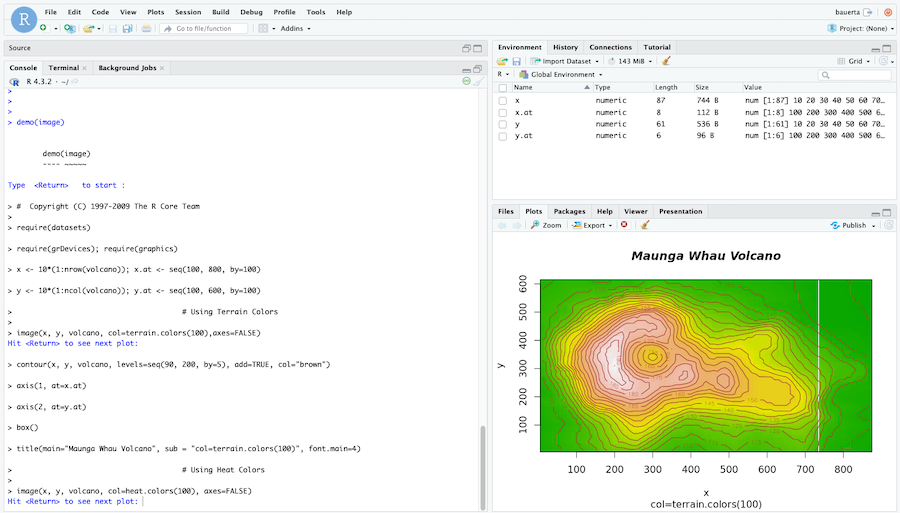

OnDemand: RStudio Server - Preview Version

Now available on Open OnDemand is the ability to start up your own version of RStudio Server. While still in development and testing, please let us know how it's working for you. Once we receive enough feedback that everything is working fairly smoothly, we'll officially release the new app.

To Access:

- Launch Open OnDemand: https://ondemand.hpc.uwec.edu

- Click on "RStudio Server (Preview)" on the homepage OR click "Interactive Apps --> RStudio Server (Preview)".

December 12th, 2023

BOSE: Apptainer Support

Now available on BOSE is support for the Apptainer (formerly Singularity) program, which allows researchers to take advantage of container-based programs for their jobs. This introduces the idea of using isolated environments, such as those offered with Docker, for researchers to run and build the same programs on both their own computer and the cluster with minimal changes.

September 6th, 2023 (BGSC Maintenance Week)

BGSC - Limited User Directory Access ("/data/users")

For security, users are no longer able to go to “/data/users” and view a list of all the accounts on the cluster. Now, if someone wants to go to another user’s home directory, they are required to know the username of the person.

To go back to their own home directory, users can use “cd” by itself or type “cd ~”.

BGSC - Scratch Management

Temporary scratch space (anything that uses “/local/scratch” or “/network/scratch”) has been standardized and updated to automatically delete files whether a job is successful or fails. We’ve noticed several jobs that create temporary files, but don’t delete them once their program crashes which results in space no longer being available for other jobs.

Local Scratch:

- Location: /local/scratch/job_XXXXX

- Submit your job as normal using ‘sbatch’.

- You can specify how much scratch space you want to use (honor system – not enforced) by adding the SBATCH setting “--tmp=<space>”

- Example 1: #SBATCH --tmp=400G # Requests 400G of scratch space.

- Example 2: sbatch --tmp=400G script.sh

- This is beneficial when submitting multiple large-scratch jobs.

Network Scratch:

- Location: /network/scratch/job_XXXX

- Set the environment variable “USE_NETWORK_SCRATCH=1” before you submit your job.

- USE_NETWORK_SCRATCH=1 sbatch [..]

- This is necessary as the automatic script runs before jobs start.

Gaussian and Q-Chem have been updated to use these new folders.

Running other programs? The new environment variable “$USE_SCRATCH_DIR” has become available which points to your scratch space.

Note: If you use a different scratch folder other than the two listed above, your files will NOT automatically be deleted.
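For programs other than Gaussian and Q-Chem, a job script along the following lines could make use of the scratch space. This is only a sketch: the job name and file names are placeholders, and the fallback to mktemp is there so the script also runs outside of Slurm, where USE_SCRATCH_DIR is not set.

```shell
#!/bin/bash
#SBATCH --job-name=scratch-demo   # hypothetical job name
#SBATCH --tmp=400G                # local scratch request (honor system, not enforced)

# Print the job's scratch directory, or a throwaway one when run outside Slurm.
scratch_dir() {
    if [ -n "${USE_SCRATCH_DIR:-}" ]; then
        printf '%s\n' "$USE_SCRATCH_DIR"
    else
        mktemp -d
    fi
}

work="$(scratch_dir)"
echo "Writing temporary files to: $work"

# Keep intermediate files in scratch; on the cluster they are deleted
# automatically whether the job succeeds or fails.
printf 'intermediate data\n' > "$work/intermediate.tmp"

# Copy anything worth keeping out of scratch before the job ends.
wc -c < "$work/intermediate.tmp"
```

To use network scratch instead, the same script would be submitted with USE_NETWORK_SCRATCH=1 set in front of sbatch, as described above.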

BGSC - New Command - sinteract

In Short: The new command ‘sinteract’ will open an interactive session on a compute node.

Instead of using sbatch, sometimes our users want to manually type their commands for testing or just to simply see the output in real time. By using the new command ‘sinteract’, users will be logged into one of our compute nodes with their requested resources.

Commands:

You can request any resources needed (CPU Cores, Memory) using arguments for ‘salloc’, which are like those for ‘sbatch’.

salloc Guide: https://slurm.schedmd.com/salloc.html

BGSC - Added new classes directory

In Short: Classes will now use /data/classes instead of /data/groups.

Going forward, any class that uses BOSE will now use a new folder under “/data/classes” instead of being tied to a faculty member’s research group folder in “/data/groups”. This folder is available on all compute nodes and in OnDemand. This allows us to bypass any future disk quotas, standardize the folder structure for all classes, and make it easier for students to access their past files. These folders will also appear in OnDemand as a bookmark, which is documented below.

New Structure: /data/classes/YYYY/<term>/<classcode>

Example: /data/classes/2023/fall/cs146

BGSC: Seff - Updated Command

The 'seff' command has been updated to show Grafana links for users to easily see real-time metrics (CPU usage, memory) of recent and currently running jobs. This can be tried out by typing "seff jobid" on the BGSC login node.

BOSE: Seff - Updated Command

The Grafana integration in the 'seff' command has been updated to link to the new centralized HPC metrics site, where everyone can view metrics for both BOSE and BGSC in one central location.

Links: HPC Metrics

Links: HPC User Guide | Official Documentation

Summer 2023

August 30th, 2023 (BOSE Maintenance Week)

BOSE - Node SSH Restrictions

Initially implemented earlier in the summer, we have finished rolling out a system that prevents users from logging into compute nodes that they are not actively running jobs on. This is both a security measure and resource protection system to ensure that any scripts running are tied to jobs on our cluster and are terminated upon job completion. If a user logs into a compute node they are running a job on, it'll automatically tie any command/scripts to the most recent Slurm job submitted by the user on that node.

BOSE - Login Node Resource Restrictions

To improve performance of our login node after noticing users running scripts outside of Slurm, we have implemented limitations to restrict users to around a max of four cores ("400% CPU Usage"). We recommend running all programs and scripts, including compiling software, on a compute node through either using “sbatch” or interactively with “sinteract”.

BOSE - Limited User Directory Access ("/data/users")

For security, users are no longer able to go to “/data/users” and view a list of all the accounts on the cluster. Now, if someone wants to go to another user’s home directory, they are required to know the username of the person.

To go back to their own home directory, users can use “cd” by itself or type “cd ~”.

BOSE - Scratch Management

Temporary scratch space (anything that uses “/local/scratch” or “/network/scratch”) has been standardized and updated to automatically delete files whether a job is successful or fails. We’ve noticed several jobs that create temporary files, but don’t delete them once their program crashes which results in space no longer being available for other jobs.

Local Scratch:

- Location: /local/scratch/job_XXXXX

- Submit your job as normal using ‘sbatch’.

- You can specify how much scratch space you want to use (honor system – not enforced) by adding the SBATCH setting “--tmp=<space>”

- Example 1: #SBATCH --tmp=400G # Requests 400G of scratch space.

- Example 2: sbatch --tmp=400G script.sh

- This is beneficial when submitting multiple large-scratch jobs.

Network Scratch:

- Location: /network/scratch/job_XXXX

- Set the environment variable “USE_NETWORK_SCRATCH=1” before you submit your job.

- USE_NETWORK_SCRATCH=1 sbatch [..]

- This is necessary as the automatic script runs before jobs start.

Gaussian and Q-Chem have been updated to use these new folders.

Running other programs? The new environment variable “$USE_SCRATCH_DIR” has become available which points to your scratch space.

Note: If you use a different scratch folder other than the two listed above, your files will NOT automatically be deleted.

BOSE – Addition of a development node (dev01)

In Short: A new “develop” partition was created to give users access to development header libraries for compiling software.

To support users that compile their own software, we have implemented a new partition “develop” that brings users to the new specialized node “dev01”. Built the same as other nodes in almost every way, dev01 has been customized to allow admins to install any development header libraries requested by our users, such as those that need “x11-devel”. These libraries will in most cases only be available on the development node.

If you need any development libraries installed for your software, let us know and we’ll get that in place.

To use this node, use the “develop” partition (#SBATCH --partition=develop), or use the newly created “sdevelop” command (see below).

BOSE - New Commands - sinteract, sdevelop

In Short: Two new commands ‘sinteract’ and ‘sdevelop’ will open an interactive session on a compute node.

Instead of using sbatch, sometimes our users want to manually type their commands for testing or just to simply see the output in real time. By using the two new commands ‘sinteract’ and ‘sdevelop’, users will be logged into one of our compute nodes with their requested resources.

Commands:

sinteract # Defaults to 1 core for 8 hours

sdevelop # Defaults to 16 cores for 8 hours and uses the ‘develop’ partition

You can request any resources needed (CPU Cores, Memory) using arguments for ‘salloc’, which are like those for ‘sbatch’.

Links: Slurm: salloc Guide

BOSE - Globus Support

In Short: We now support Globus file transfers!

We’re happy to report that we now officially support Globus, which is a service that allows researchers to transfer large files between not only their computer and BOSE, but also to clusters at other supported institutions. Instructions are still in the works, but you can now use the following two collections:

- UWEC – BOSE Home Directories (“/data/users”)

- UWEC – BOSE Group Directories (“/data/groups”)

The Globus Connect Personal program has also been made available in the Software Center and Self Service on all University-owned Windows and Mac computers on campus.

Links: Globus Website

BOSE - Power Saving

In Short: Compute nodes will now automatically power down when idle for a period of time.

It should surprise nobody that BOSE uses quite a bit of power to operate. To reduce our power usage during quiet periods, we have implemented a new system that automatically shuts down compute nodes that have been idle for a period of time. If a job needs one of these nodes, the node automatically starts back up for the job to use. This does mean that some jobs may take a few extra minutes to start while the machines power up. To check a node’s status, run “sinfo” or “savail”: a state of “idle~” means the node is in power saving mode and will start up automatically as needed. GPU nodes, high-memory nodes, and the new development node are excluded from power saving at this time because of their already limited availability.
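One way to list the nodes that are currently powered down is to filter the node states for the trailing “~”. This is a small sketch: the helper name `powered_down_nodes` is hypothetical, and it expects one “node state” pair per line, which `sinfo -N -h -o "%N %t"` should produce.

```shell
# Print the names of nodes whose state ends in "~" (power saving).
# Reads "node state" pairs from standard input, one per line.
# sort -u removes repeats, since a node can be listed once per partition.
powered_down_nodes() {
    awk '$2 ~ /~$/ { print $1 }' | sort -u
}

# On the cluster:
#   sinfo -N -h -o "%N %t" | powered_down_nodes
```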

BOSE - Added new classes directory

In Short: Classes will now use /data/classes instead of /data/groups.

Going forward, any class that uses BOSE will use a new folder under “/data/classes” instead of being tied to a faculty member’s research group folder in “/data/groups”. This folder is available on all compute nodes and in OnDemand. This allows us to bypass any future disk quotas, standardize the folder structure for all classes, and make it easier for students to access their past files. These folders also appear in OnDemand as bookmarks, as documented below.

New Structure: /data/classes/YYYY/<term>/<classcode>

Example: /data/classes/2023/fall/cs146
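If you build these paths in your own scripts, a small helper along these lines may be handy. The function name `class_dir` is hypothetical, and lowercasing the term and class code is an assumption based on the example above.

```shell
# Build the class folder path from a year, term, and class code.
# Lowercasing the term and code is an assumption based on the example path.
class_dir() {
    year="$1"
    term=$(printf '%s' "$2" | tr '[:upper:]' '[:lower:]')
    code=$(printf '%s' "$3" | tr '[:upper:]' '[:lower:]')
    printf '/data/classes/%s/%s/%s\n' "$year" "$term" "$code"
}

class_dir 2023 Fall CS146   # prints /data/classes/2023/fall/cs146
```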

OnDemand - Updated to latest version

In Short: Open OnDemand has been updated to the latest version.

While this won’t impact our users too much, there will be minor changes throughout Open OnDemand that users may notice. The main benefit for upgrading Open OnDemand is that it gives the admin team more flexibility to customize the tools to fit our needs.

Links: OnDemand Release Notes

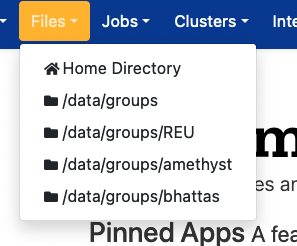

OnDemand – Updated files bookmarks

In Short: The file bookmarks have been overhauled with new formatting and the addition of the new class folders.

Two primary changes have been made to the bookmarks shown under the “Files” dropdown in OnDemand and on the left side of the file browser.

Addition of “/data/classes”:

- To go along with the new /data/classes folder structure that was added to BOSE, OnDemand has been updated to show these folders in the following naming convention:

- Class: CODE## (Term YYYY)

- Example: Class: CS146 (Fall 2023)

- The full path is still displayed off to the right.

Changing how “/data/groups” is displayed:

- To match the display of the class folders, the group folders have also been changed to appear as:

- Group: username

- Example: Group: bauerta

- The full path is still displayed off to the right.

OnDemand – Enabled Support Tickets

In Short: A new feature of OnDemand “Support Tickets” has been enabled.

Simply put, this is another way for our users to contact us when they have issues with their jobs. It works the same as emailing us; the main benefit is that users can click “Submit support ticket” on an interactive job when there are problems, which automatically includes additional details that the HPC Team can reference. Submitting support tickets is also available in the “Help” dropdown in the top right of the window.

June 26th, 2023

BOSE - Upgraded Compute Node - 1TB RAM

Compute node cn54 has been upgraded to support 990GB of RAM and renamed to lm02. This node is now part of the 'highmemory' partition and has been removed from the normal week/month Slurm partitions. This increases our support for high memory jobs from one node to two nodes, with a third anticipated by the end of summer.

May 30th, 2023

BOSE - Increased Memory

All jobs that run on the standard compute nodes (cn##) through the week or month partitions are now able to request up to 245GB of memory. This was previously limited to 200GB.

Spring 2023

May 17th, 2023

BOSE/BGSC - New Software - Rclone

Rclone is a command-line tool for syncing files to a variety of cloud-based services, such as OneDrive, Google Drive, and Dropbox. With it, you can back up your research files to an off-site service.

To enable it, first run "module load rclone", then set it up by running "rclone config". View the documentation below for service-specific instructions.
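Once "rclone config" has been completed, a backup might look like the sketch below. `rclone copy` and `--dry-run` are real Rclone features; the helper name `backup_to_remote`, the remote name `onedrive`, and both paths are hypothetical placeholders.

```shell
# Copy a local folder to a configured Rclone remote.
#   $1 = local folder, $2 = remote name from "rclone config", $3 = folder on the remote
# --dry-run only reports what would be copied; remove it to transfer files.
backup_to_remote() {
    rclone copy --dry-run "$1" "$2:$3"
}

# On the cluster (after "module load rclone"):
#   backup_to_remote "$HOME/my-project" onedrive bose-backup/my-project
```

Starting with a dry run is a cautious choice: it lets you confirm the source and destination before any data leaves the cluster.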

Links: Rclone Documentation

April 29th, 2023

BOSE: Seff - Updated Command

The 'seff' command allows users to see their job's memory and CPU efficiency upon job completion. This command has now been extended to show Grafana links to easily view real-time metrics of not only past jobs, but actively running ones as well.

April 19th, 2023

OnDemand: Visual Studio Code - Preview

A preview version of Visual Studio Code has been added to Open OnDemand. This is a popular code editor that supports syntax highlighting and custom extensions, and has a built-in terminal to run commands. After further testing and modifications, we'll make an announcement and officially release it.

Links: VS Code Website

March 20th, 2023

OnDemand: Jupyter - Memory Usage

Jupyter now shows your memory usage across all of your notebooks in the upper right corner when you are editing a notebook. This lets everyone make more informed decisions about how much memory they are using, and how much is left before they hit the "out of memory" error.

February 2nd (BOSE Maintenance Week)

BOSE: Slurm - Default Email Domain

Specifying "#SBATCH --mail-user" is no longer required when you use "#SBATCH --mail-type" to send emails to your UWEC account. Now, by default, if you do not specify an email address, Slurm will automatically address the email based on who submitted the job.

The greatest benefit is that there is one fewer change to make when you share your Slurm scripts with others in your class or research group.

You can, however, still specify the email address if you wish, especially if you have an alternative non-UWEC account you want emails sent to.

(This is the same change that was previously made to BGSC.)
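As a rough sketch, a job script that relies on the new default could look like the one written below. `--mail-type=END,FAIL` is a standard Slurm option; the file name `notify_job.sh` and the placeholder workload are hypothetical.

```shell
# Write a batch script that requests email without naming an address.
cat > notify_job.sh <<'EOF'
#!/bin/bash
# Send an email when the job ends or fails. No --mail-user line is needed;
# the email is addressed based on who submitted the job.
#SBATCH --mail-type=END,FAIL

# Placeholder workload.
echo "Job running on $(uname -n)"
EOF
```

Because no address is hard-coded, the same file can be shared with classmates or group members unchanged.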

BOSE: Slurm - Command Autocompletion

Slurm commands now have the ability to automatically suggest keywords, options, parameters, and more by pressing tab twice. Try it out!

Examples:

sbatch --<tab><tab> # Show all parameters for 'sbatch'

srun --exclude=<tab><tab> # Show the list of nodes you can exclude

BOSE: Various Software Updates

Collection of various software / firmware updates that may be of some use.

- CUDA: 11.7.4 -> 11.8

- Slurm: 22.05.3 -> 22.05.8

- OpenMPI: Added 4.1.4

BOSE: New/Updated Commands

| Command | Description |
|---|---|
| myjobs | Replaced the original command with a brand new script that lists all of your own jobs, broken down by pending vs. running. This also provides an easier way to see how much time remains before a job times out. Note that there are additional plans in the works to tweak this command and expand its feature set. Originally an alias for "squeue -au |

OnDemand: Jupyter - Updated Form

The Jupyter request form has been updated to use the previous version that's been in the works over the past several months. It now features:

- Improved instructional text

- Accounting Group - Choose which account the notebook is for (research vs class)

- Working Directory - Choose where to start Jupyter Notebook, which is needed for notebooks in /data/groups

OnDemand: Desktop Mode - Implemented on all nodes

Up until now, the ability to use the desktop feature on a node was set up on only a small portion of nodes. Now, every compute and GPU-based node on BOSE has the necessary support to utilize the desktop mode feature. This is a wonderful way to run GUI-based programs that previously had to run through X11. You can use this by going to Interactive Apps > Desktop, which runs through the same Slurm system as any other job.

OnDemand: Help Navigation Changes

Small changes were implemented under the "Help" dropdown in the top navigation, such as relabeling the documentation link to reference our wiki and adding a link to our new metrics site.

OnDemand: New File Bookmarks

Navigating to your shared group files can be a pain sometimes, so we've improved the default bookmarks in the Files app to automatically detect what groups you are in for quick and easy access.

In addition to automatically adding your groups, we also implemented the ability to choose your own if you want for greater flexibility. Create/edit one of these files:

- ~/ondemand/file_paths_add <- Add more paths to the list

- ~/ondemand/file_paths_override <- Completely override / replace the automatically generated list of groups

In either file, place one path per line. Note that each path MUST exist and MUST be the full absolute path. Due to current limitations, you cannot use "~" to refer to your home directory. Once you update the file, either log in to OnDemand again or click "Help -> Restart Web Server".
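The rules above (absolute path, path must exist, one per line) can be checked before a bookmark is written. The helper below is only a sketch; the function name `add_bookmark` and the example group path are hypothetical.

```shell
# Append a bookmark to ~/ondemand/file_paths_add after checking the rules above.
add_bookmark() {
    path="$1"
    list="$HOME/ondemand/file_paths_add"

    # The path must be absolute ("~" and relative paths are rejected).
    case "$path" in
        /*) ;;
        *) echo "Not an absolute path: $path" >&2; return 1 ;;
    esac

    # The path must already exist.
    if [ ! -e "$path" ]; then
        echo "Path does not exist: $path" >&2
        return 1
    fi

    mkdir -p "$HOME/ondemand"
    # Append only if the exact line is not already present.
    grep -qxF -- "$path" "$list" 2>/dev/null || printf '%s\n' "$path" >> "$list"
}

# Example (hypothetical group folder):
#   add_bookmark /data/groups/mygroup
```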

OnDemand: Active Jobs - CPU & Memory Metrics

The Active Jobs app (Jobs -> Active Jobs) now shows you the CPU and Memory graph for a running job. You can view this, along with other details about your job, by clicking the arrow on the left side next to your Job ID.

This is hooked into the Grafana system that we use for metrics across the entire cluster.

Metrics: Integrated With BOSE Statistics

During our BGSC maintenance period at the beginning of January 2023, we set up a new metrics server to move BGSC to Grafana. During BOSE's maintenance period, we updated the metrics site to be a single place to view stats for BOTH servers rather than each having their own site.

This project is still ongoing as we continue to make new dashboards and screens that anyone can use to see the state of the supercomputing clusters. There is especially work to be done to include more dashboards from BOSE's own metrics site.

Links: Metrics Site (BGSC + BOSE) | Original BOSE Metrics | Grafana Website

Fair Share / Policy Changes

As usage of the cluster changes, we also must change how the cluster is used to ensure fair and balanced access to anyone who wants to take advantage of our computing resources. Big thanks to our three faculty coordinators for their collaboration on updating our user policies.

- BOSE: New users are restricted to a single node and GPU card until training is complete

- BOSE: Research groups are limited to 75% (roughly 40) of the compute nodes at any one time across all jobs

- BOSE: Users are limited to three GPU cards at any one time across all jobs

- BGSC: Jobs are restricted to only use a single node (please use BOSE if you require more than one)

Winterim 2023

January 6th, 2023 (BGSC Maintenance Week)

BGSC: Switch to Rocky Linux

Operating System: CentOS 8.X -> Rocky Linux 8.7

Due to CentOS 8 reaching end-of-life, and changes to how CentOS operates, we have switched our operating system to Rocky Linux 8.7 in line with the general computing industry. Since both CentOS and Rocky Linux are built off the same underlying Red Hat Enterprise Linux (RHEL), there should only be minimal-to-no differences in how your scripts function.

Links: CentOS 8 EOL | Changes to CentOS | Rocky Linux

BGSC: Grafana Integration

To modernize our server metrics and cluster monitoring, we have switched away from the legacy Ganglia in favor of Grafana. This'll give us greater capabilities for collecting information about Slurm and node performance and presenting it in our own customizable dashboards for easy consumption and understanding.

This project is ongoing, with several new changes and tweaks to come, and we will eventually integrate BOSE's own Grafana installation into a single source for all HPC-related metrics.

Links: BGSC Metrics | BOSE Metrics | Grafana Website

BGSC: Increase User Storage / Decrease Network Scratch

Due to space limitations on the cluster and low use of the network scratch, we have opted to move 5TB from /network/scratch and make it available for user and group data instead. This'll allow us to accommodate more users and provide more flexibility with projects that require more space than the typical 500GB limit.

BGSC: New Scratch Paths

For consistency with BOSE's setup, and to make it easier to understand where scratch space is, we have implemented two new folders you can use in your scripts. The new names make it easy to remember which path is local scratch and which is network scratch.

Local Node Scratch: /local/scratch (points to /node/local/scratch)

Network Scratch: /network/scratch (points to /data/scratch)

We will continue to have the original paths available for backwards compatibility with no plans to remove them at this time.

BGSC: Slurm - Default Email Domain

Specifying "#SBATCH --mail-user" is no longer required when you use "#SBATCH --mail-type" to send emails to your UWEC account. Now, by default, if you do not specify an email address, Slurm will automatically address the email based on who submitted the job.

The greatest benefit is that there is one fewer change to make when you share your Slurm scripts with others in your class or research group.

You can, however, still specify the email address if you wish, especially if you have an alternative non-UWEC account you want emails sent to.

BGSC: New/Updated Commands

| Command | Description |
|---|---|
| scancel | Updated to ask for confirmation before canceling a Slurm job. |
| jobinfo | Alias for 'scontrol show job |
| myjobs | Replaced the original command with a brand new script that lists all of your own jobs, broken down by pending vs. running. This also provides an easier way to see how much time remains before a job times out. Note that there are additional plans in the works to tweak this command and expand its feature set. Originally an alias for "squeue -au |
| seff | Not a new command, but never previously announced. This command shows the general efficiency of a completed job, including its CPU and memory usage. It's not 100% accurate, but it's a good starting point for making future resource decisions. |